import numpy as np

from scipy.stats import norm,uniform

import matplotlib.pyplot as plt

import seaborn as sbn

from scipy.optimize import minimize

import pandas as pd

import warnings

warnings.filterwarnings('ignore')

sbn.set_style('white')

sbn.set_context('talk')

Properties of MCMC#

In this workbook, I will briefly go over the theory of MCMC. We’ll cover questions like why does MCMC work (why does it allow us to draw samples from the posterior) and what conditions are necessary to get this result.

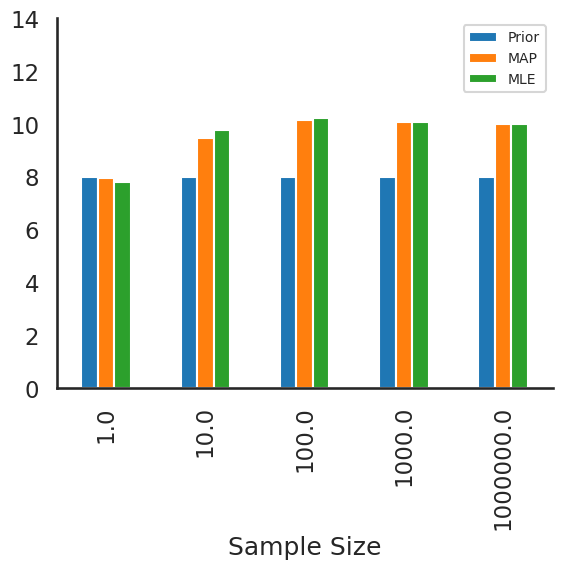

Asymptotics#

A decent starting point is to go back to our simple example using conjugate priors and analytical Bayes. Our data is distributed normal with mean \(\mu\) (unknown) and standard deviation equal to \(\sigma\) (known). We have priors describing our beliefs about the distribution of \(\mu\): distributed normal with mean \(\mu_0\) and standard deviation \(\sigma^2_0\). In this setting, our posterior can be solved for analytically as:

How might we expect the posterior to behave as sample size get increasingly large? First examine the mean of the analytical posterior:

which means that the mean of the analytical posterior will converge on the Maximum Likelihood Estimate of the mean: the data completely dominates the prior.

What about the variance? Doing a similar excercise shows that

So, the asymptotic properties of our Bayesian estimator, exactly parallels the frequentist worldview: our posterior collapses to the Maximum Likelihood Mean with zero variance for super huge sample sizes.

We can also see this using numerical methods and revisit our example from 2 worksheets ago:

Mean of data is unknown, variance is known (\(\sigma=3\))

Priors \(\mu_0 = 8\), \(\sigma_0 = 2\)

# plot the MLE mean, and MAP estimate at different sample

# sizes of data given below

sample_size = [1,10,10**2,10**3,10**6]

sigma = 3

mu_0 = 8

sigma_0 = 2

Define the negative log likelihood function (we need to return the

negative for using the scipy minimize function to find MAP:

def log_posterior_numerical(mu,data,sigma,mu_0,sigma_0):

log_prior = norm(mu_0,sigma_0).logpdf(mu)

log_like = norm(mu,sigma).logpdf(data).sum()

return -1*(log_prior + log_like)

For for each sample size, generate a dataset and calculate MAP and the MLE estimate for the mean which is just the mean of the data. Then store the results:

results = np.zeros((len(sample_size),4))

for idx, i in enumerate(sample_size):

data = norm(10,3).rvs(i)

# for this dataset, solve for map

res = minimize(log_posterior_numerical,np.mean(data),

args=(data,sigma,mu_0,sigma_0),options={'disp': False},

method='BFGS')

results[idx,:] = [i,mu_0,res.x[0],np.mean(data)]

# convert to a pandas dataframe

results=pd.DataFrame(results,columns=['Sample Size','Prior','MAP','MLE'])

# plot results

plt.figure(figsize=(12,10));

results.set_index('Sample Size').plot(kind='bar');

sbn.despine();

plt.ylim(0,14);

plt.legend(loc='upper right', fontsize=10);

plt.show()

<Figure size 1200x1000 with 0 Axes>

Back to the Properties of MCMC#

This section draws very heavily from Patrick Lam’s excellent course notes on this topic here

The last notebook asserted that by using the Metropolis Hasting algorithm to construct a Monte-Carlo Markov Chain, is akin to drawing randomly from the posterior distribution of the parameters. Why is this the case and what conditions do we need for it to hold true?

There are proofs showing that with appropriate choices of the proposal distribution \(q(\theta^P_{t+1},\theta_t)\), the sequence of values we construct using MH is a markov chain approximately equal to taking random draws from the posterior: \(p(\theta|\mathbf{y,x})\) [see page 124 “Contemporary Bayesian Econometrics and Statistics”, John Geweke].

A Markov Chain#

is a stochastic process in which future states are independent of past states given the present state

stochastic process: a consecutive set of random quantities defined on some known state space \(\Theta\)

\(\Theta\): set of all possible parameter values

consecutive implies a time component defined by \(t\)

The Strong Law of Large Numbers (SLLN)#

Let the sequence \(\theta_1,\theta_2,\ldots,\theta_N\) be iid distributed random variables where \(E(x_i)=\mu\). Then we know that

Note: N is chain length, not the size of your dataset

So think of these \(\theta_i\) as being draws of parameter values from the posterior. Remembering back to last time, we see that there is likely to be autocorrelation, so that our sequence isn’t totally iid. MH samples (if done correctly) are nearly independent but not completely independent from one value in the sequence to another.

Markov Chain Dependence and the Ergodic Theorem#

Since the sequence of parameter values generated by MH are not independent, we can invoke the Ergodic Theorem to be confident that our sequence can be used for inference.

Let \(\theta_1,\theta_2,\ldots,\theta_N\) be N values from a Markov chain that is aperiodic, irreducible, and positive recurrent then the chain is ergodic and \(E[\theta] < \infty\), then we know that

where \(\pi(\theta)\) is the probability of transitioning from to another state given a current state (where state is defined by the current value of our parameters). In a MH context, this transition probability is governed by the MH accept reject criteria. This also generalizes to other moments, like standard deviations, etc.

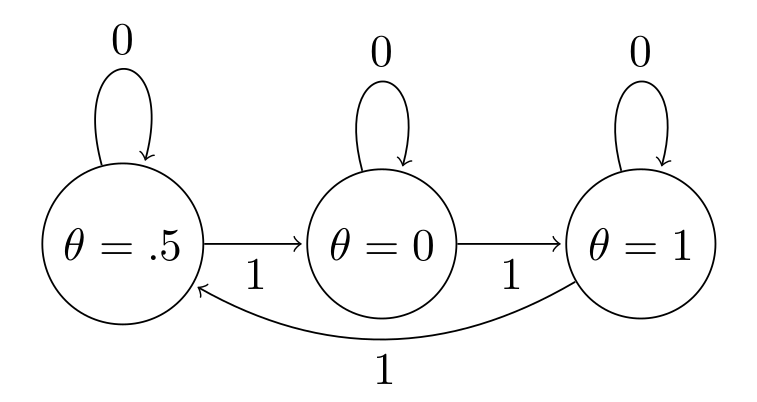

Aperiodic#

We focus on explaining this concept using a discrete state space. For most of our models, the state space for parameters is continuous. Still the requirement of aperiodicity applies. Focusing on the discrete state space example helps to understand what this assumption means for our samples (markov chain).

Definition of aperiodicity for a discrete state space:

Compute the number of steps required to return to a particular value in the chain

Find the maximum integer value that divides evenly into the steps computed above, call it \(d\)

If \(d\) = 1, the sequence (the Markov Chain) is said to be aperiodic.

The following shows a periodic sequence of values, where \(\theta\) always cycles in order \([.5,0,1]\)

Fig. 4 Aperiodicity#

Here, \(d=3\) since there is only one path, having started at \(\theta=.5\) to return to \(.5\): the sequence \([.5, 0, 1, .5]\), requres three moves (samples) to return to the value. So the chain is periodic.

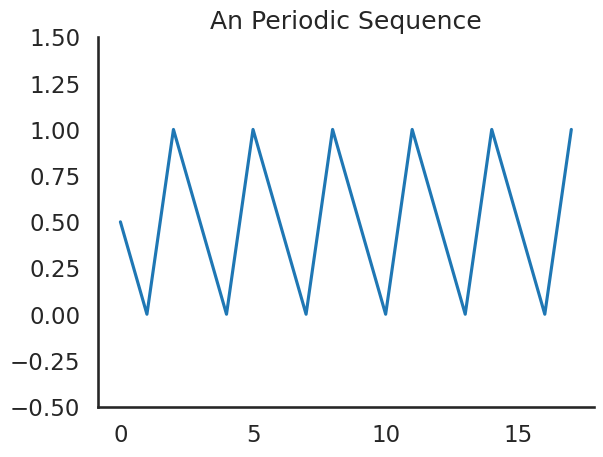

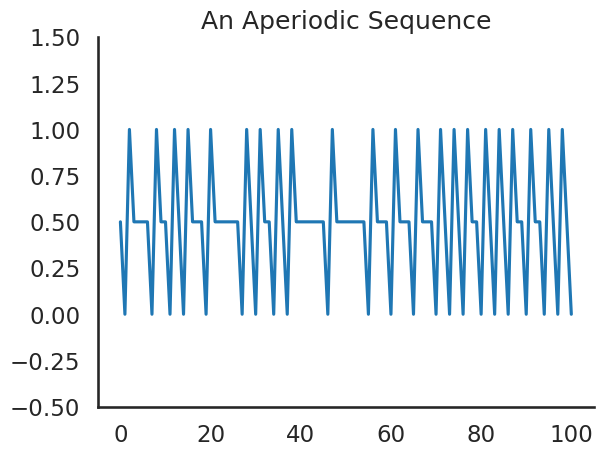

Here is the traceplot for this periodic chain:

theta = [.5,0,1]*6

time = np.arange(3*6)

plt.plot(time,theta)

plt.title('An Periodic Sequence')

plt.ylim(-.5,1.5)

sbn.despine()

plt.show()

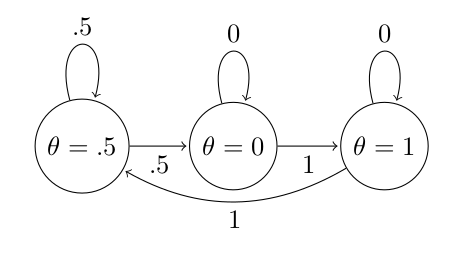

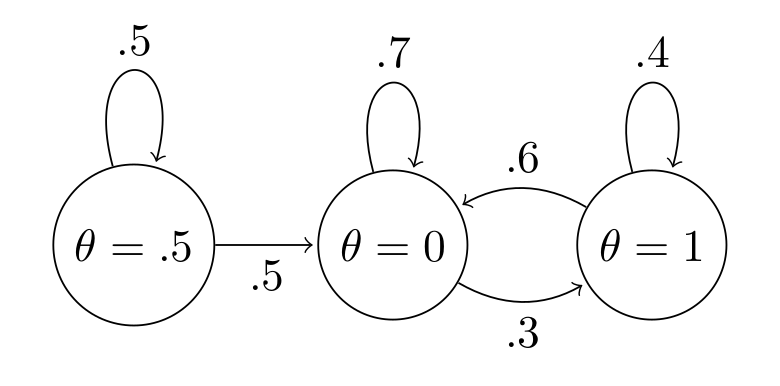

An easy way to break periodicity is to allow some chance that values can repeat in the chain, like this:

Fig. 5 Periodicity with Chance to Repeat Values#

Now there are two sequences that having started at \(\theta=.5\) to return to that value. For example, here are some sequences:

Now the number of moves is \([1, 3]\), making \(d=1\), therefore we have an aperiodic sequence.

theta = .5

chain = [theta]

for i in range(100):

if theta == .5:

u = uniform.rvs()

theta = (u >= .5) * .5 + (1- u < .5) * 0

elif theta == 0.:

theta = 1.

elif theta == 1.:

theta = .5

chain.extend([theta])

plt.plot(chain)

plt.title('An Aperiodic Sequence')

sbn.despine()

plt.ylim(-.5,1.5)

plt.show()

The Metropolis Hasting algorithm application of a non-zero chance of repeating values helps ensure aperiodicity.

Remember, failing the aperiodicity assumption means that we cannot use our markov chain for any statistical inference, since we aren’t guaranteed that it is capturing the true underlying posterior.

Irreducibility#

A Markov Chain is irreducible if it is possible to go from any state to any other state (doesn’t have to be in 1 step).

This chain is reducible, since once we transition away from \(\theta=0.5\), we never go back:

Fig. 6 Irreducibility#

The problem is again, if our chain is reducible, we can’t rely on the ergodic theorem to allow us to use our constructed chain for inference. This one is much more difficult to visualize since something that looks reducible might re-occur if we persisted with a sufficiently long chain length.

Positive Recurrence#

For positive recurrence, we need the time it takes for the chain to return to some value to be finite.

Example. Suppose we are modeling the mean of a dataset as we have been doing. A candidate value that is included in our chain is -10. This value is very unlikely given our data:

norm(-10,sigma).pdf(data)

array([2.69339866e-11, 1.99728211e-07, 7.72499391e-14, ...,

1.47919970e-13, 3.75071205e-09, 8.96710668e-10])

We do however, need for MH to have some positive probability of returning back to this value even if it is very small. And MH does this because of the accept-reject criteria it employs- inferior values of our parameter vector are sometimes included. So while it may take a very long time for the chain to return to -100 it will eventually (probably an extremely long time).

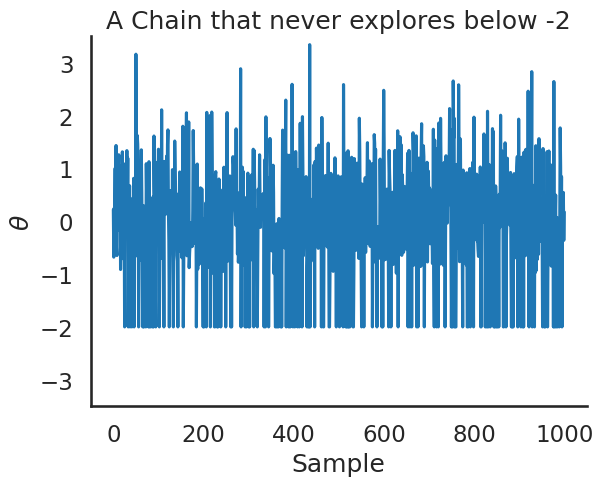

Chain Pathologies#

Ergodicity ensures that we explore the entire parameter space and never get “stuck” in any given place. Sometimes however, we have a breakdown in the MCMC process where our chain is limited and can’t fully explore \(\theta\). The following example shows a case where our chain is never allowed to go below -2. It is easy to visualize the problem simply by examining the trace plot.

bad_chain = norm().rvs(1000)

bad_chain[bad_chain<-1] = -2

plt.plot(np.arange(1000),bad_chain)

plt.ylim(-3.5,3.5)

plt.xlabel('Sample')

plt.ylabel('$\\theta$')

plt.title('A Chain that never explores below -2')

sbn.despine()

plt.show();

Without getting too much in the weeds at this point, it may be possible to transform the parameter in the above case to sidestep the problem we can see above.

Without ergodicity you are screwed#

If we can’t assume ergodicity, then we can’t use our chain for inference. You might be able to resort to practices like thinning to whinnow your chain down to something more like iid (and then rely on SLLN), but it will be difficult to convince your audience and it will require running very long chains.

MH MCMC Inference#

Having established these three properties and having used random-walk MH, we are assured that our chain represents somewhat correlated random draws from the posterior that can be used for all manner of inference:

Means

Credible Intervals

Medians

Standard deviations

Provided that our chain has converged to the limiting distribution, which is a numerical issue.